In recent years, the scale, performance, and speed of quantum computers have significantly improved. Despite these advancements, many technical challenges still need to be overcome before these computers can be implemented on a larger scale.

For instance, before existing quantum algorithms can be implemented at high speeds, engineers must develop fault-tolerant and reliable quantum processors. This summer, researchers at IBM announced error mitigation techniques to help move toward this goal.

All About Circuits interviewed Sarah Sheldon, senior manager of the theory and capabilities team at IBM Quantum, to learn more about these advancements. Sheldon’s team specializes in developing algorithms that can bring the performance of quantum computers above that of classical computers, as well as tools for optimizing the performance of quantum systems.

Sarah Sheldon, the senior manager of theory and capabilities at IBM Quantum.

Probabilistic Error Cancelation and Zero-noise Extrapolation

The new error mitigation tools introduced by Sheldon and her colleagues include probabilistic error cancelation (PEC) and zero-noise extrapolation (ZNE). Both techniques trade speed for accuracy: a task takes longer to complete, but its results are closer to the noise-free values.

“The error mitigation techniques that we are developing really exist on top of the hardware, but they depend strongly on being able to learn the noise from the device,” said Sheldon. “Error mitigation also is very linked to hardware quality, as improvements in hardware dramatically reduce the overhead required by these techniques.”

PEC and ZNE are advanced techniques that resulted from the extensive quantum computing research conducted at IBM Quantum over the past few years.

“For PEC, we learn the noise in a circuit layer and implement circuit instances that on average invert the effect of the noise,” Sheldon explained. “In ZNE, on the other hand, we run the circuit as is and then recalibrate the circuit with all of the microwave controls slowed down to effectively increase the noise and run it again. When we know how the noise scales with the duration of the control pulses, we can extrapolate from the outcomes of these different circuits to the zero-noise limit.”
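The extrapolation step Sheldon describes for ZNE can be illustrated with a small sketch. It assumes a toy device whose measured value decays exponentially as the noise is scaled up; the function names, the decay rate, and the choice of Richardson (polynomial) extrapolation are illustrative assumptions, not details of IBM's implementation.

```python
import math


def noisy_expectation(scale, ideal=1.0, decay=0.2):
    """Toy device model (an assumption): the signal decays
    exponentially as the noise scale factor grows."""
    return ideal * math.exp(-decay * scale)


def richardson_extrapolate(scales, values):
    """Evaluate, at scale 0, the polynomial that passes through
    every (scale, value) point (Lagrange form)."""
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= s_j / (s_j - s_i)
        estimate += weight * v_i
    return estimate


# Scale 1.0 is the circuit as is; larger scales stand in for
# runs with the control pulses stretched to amplify the noise.
scales = [1.0, 1.5, 2.0]
values = [noisy_expectation(s) for s in scales]
zne_estimate = richardson_extrapolate(scales, values)

print("unmitigated:", values[0])
print("extrapolated to zero noise:", zne_estimate)
```

In this toy model the extrapolated value lands much closer to the ideal value of 1 than the unmitigated run does, at the cost of running the circuit three times instead of once.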

Striving Toward Fault-tolerant Operation

The term fault tolerance refers to the ability to run a quantum circuit and correct errors as they occur to attain accurate results on a shot-by-shot basis. For engineers to achieve fault-tolerant operation of quantum computers, they first need to devise error correction tools that use many physical qubits to encode a single logical qubit.

“There is additional overhead for an error-corrected architecture to implement a universal set of logical gates,” Sheldon said. “What PEC shows is that we do not have to wait for fault tolerance to do useful quantum computing. PEC allows us to reduce the effect of noise (i.e., increase the accuracy) on expectation value measurements.”

Expectation values are essentially an average of all the possible outcomes of a measurement weighted by their likelihood. These values are relevant to a broad range of research and real-world problems, the most obvious of which is the simulation of quantum systems.
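As a concrete illustration of that weighted average, the sketch below estimates the expectation value of a two-qubit parity observable from measurement counts. The counts are made-up numbers and the function name is hypothetical; the example only shows the arithmetic.

```python
def expectation_from_counts(counts):
    """Average the parity eigenvalue (+1 for an even number of 1s,
    -1 for an odd number), weighted by how often each bitstring
    was observed."""
    shots = sum(counts.values())
    total = 0
    for bitstring, n in counts.items():
        parity = (-1) ** bitstring.count("1")
        total += parity * n
    return total / shots


# Hypothetical counts from 1,000 shots of a two-qubit circuit.
counts = {"00": 480, "11": 470, "01": 30, "10": 20}
zz = expectation_from_counts(counts)
print(zz)
```

Each outcome contributes its eigenvalue times its observed frequency, so noise that shifts the frequencies shifts the estimate directly.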

While fault tolerance is IBM's ultimate goal, IBM Quantum is targeting error mitigation as the path to push quantum computing to usefulness. Image (modified) used courtesy of IBM

PEC can mitigate the noise affecting expectation values only after identifying the noise impacting a quantum system, so this is what the researchers first set out to achieve.

“Once we know the noise in the system, we undo its effects by adding in extra gates into our circuit and averaging over many instances of such circuits—so on average these additional gates apply the inverse of the underlying noise,” Sheldon said. “To get accurate values, we have to average enough times to reconstruct this inverse. This means we have a sampling overhead for measuring unbiased estimators with small enough variance.”
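The averaging procedure Sheldon describes can be sketched with a deliberately simple model: a single qubit prepared in the 0 state and subject to a bit flip with known probability `p`. The model, the function name, and the parameter values are assumptions chosen for illustration and do not represent the noise learned on IBM hardware. The inverse of this noise is written as a signed mixture of "do nothing" and "apply an extra X gate", and each shot samples one of the two.

```python
import random


def run_pec(p, shots, seed=0):
    """Compare raw and PEC-mitigated estimates of <Z> for a qubit
    prepared in |0> under a bit-flip channel of probability p."""
    rng = random.Random(seed)
    # Inverse channel as a quasi-probability: a * identity + b * X.
    a = (1 - p) / (1 - 2 * p)
    b = -p / (1 - 2 * p)
    gamma = abs(a) + abs(b)  # per-gate sampling overhead factor

    raw_total = 0.0
    pec_total = 0.0
    for _ in range(shots):
        # Unmitigated shot: noise flips the bit with probability p.
        bit = 1 if rng.random() < p else 0
        raw_total += 1 - 2 * bit

        # Mitigated shot: same noise, plus a randomly inserted X gate.
        bit = 1 if rng.random() < p else 0
        if rng.random() < abs(b) / gamma:
            bit ^= 1   # the extra X gate flips the outcome
            sign = -1  # sign of the negative coefficient b
        else:
            sign = 1
        pec_total += gamma * sign * (1 - 2 * bit)

    return raw_total / shots, pec_total / shots, gamma


raw, mitigated, gamma = run_pec(p=0.1, shots=100_000)
print("raw:", raw, "mitigated:", mitigated, "gamma:", gamma)
```

The mitigated average converges to the ideal value of 1, while the raw average stays near 0.8. Every mitigated sample has magnitude `gamma`, which is greater than 1, so more shots are needed to reach the same statistical precision. That is the sampling overhead in miniature.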

Reducing Circuit Noise and the "Gammabar"

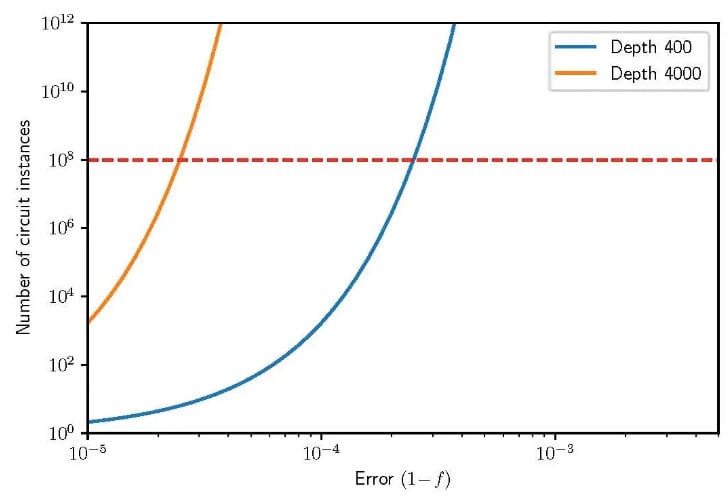

The PEC technique has a sampling overhead factor, which the researchers refer to as gammabar. Because this factor depends on the overall noise of a circuit, reductions of noise in a system (either by improving devices or incorporating error correction techniques) will bring the gammabar increasingly closer to 1.

To produce noise-free expectation values when the gammabar is greater than 1, the researchers need to average their measurements over many circuit instances. The number of instances required grows exponentially with the size of the circuit, which is known as an exponential sampling overhead.

This diagram depicts an estimation of PEC circuit overhead for 100 qubit trotterization circuits of depth 400 and 4000, including the time evolution of an Ising spin chain. The dotted red line shows one day of runtime assuming a fixed 1 kHz sampling rate. Image used courtesy of IBM

“The larger the circuit that we want to implement, the larger this sampling overhead is, but the good news is that the overhead is only weakly exponential and it comes down exponentially with lower gammabar,” Sheldon said. “Our path to improving quality will always focus on coherence and gate fidelity. We have now measured coherence times over 1 ms, and with our new gate architecture in our Falcon R10 devices, we’ve surpassed 99.9% two-qubit gate fidelity. Integrating these advances into our largest devices will be critical for going to larger circuit sizes.”
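To get a feel for how sensitive the overhead is to the gammabar, the following back-of-the-envelope sketch assumes the number of samples grows as the gammabar raised to the power of qubits times depth. That scaling law and the function names are assumptions made for this example, since the article does not state a formula. The circuit sizes and the 1 kHz sampling rate come from the figure caption above.

```python
import math


def log10_samples(gammabar, qubits, depth):
    """log10 of the assumed sample count gammabar ** (qubits * depth).
    Working in logs avoids overflow for large circuits."""
    return qubits * depth * math.log10(gammabar)


def max_gammabar(qubits, depth, budget_seconds, rate_hz):
    """Largest gammabar whose assumed overhead fits in the time budget."""
    max_samples = budget_seconds * rate_hz
    return max_samples ** (1.0 / (qubits * depth))


ONE_DAY = 24 * 60 * 60  # seconds
RATE_HZ = 1000.0        # fixed 1 kHz sampling rate

for depth in (400, 4000):
    limit = max_gammabar(100, depth, ONE_DAY, RATE_HZ)
    print(f"100 qubits, depth {depth}: gammabar must stay below {limit:.6f}")
```

Under this assumed model, a tenfold deeper circuit requires the gammabar to sit roughly ten times closer to 1 for the same one-day budget, which is why small hardware improvements translate into much larger feasible circuits.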

The Path Toward Larger Circuits and Operable Quantum Computers

Along with improving quality, increasing the speed at which circuits run can also make larger circuits feasible. Averaging more circuit instances in a reasonable amount of time helps absorb the higher sampling overhead that comes with larger circuits.

“We also care that our systems are stable for long periods of time so that the noise doesn't change from the time we learn it to the time we mitigate it,” Sheldon said. “Currently, we find it sufficient to calibrate our devices once a day, but we need more research to analyze the effect of any drift on the accuracy of mitigated results.”

Advances in the quality and speed of quantum systems, combined with PEC and the other error mitigation strategies introduced by Sheldon and her colleagues, could be an important milestone in the journey toward quantum advantage. By tracking the gammabar factor, the researchers will be able to determine the size of a circuit they can run and thus the scale of the problems they can solve with their systems.

IBM Unlocks "Dynamic Circuit Capabilities"

Sheldon and her colleagues are now developing error mitigation techniques that could reduce the sampling overhead. In addition, they are now starting to unlock so-called dynamic circuit capabilities.

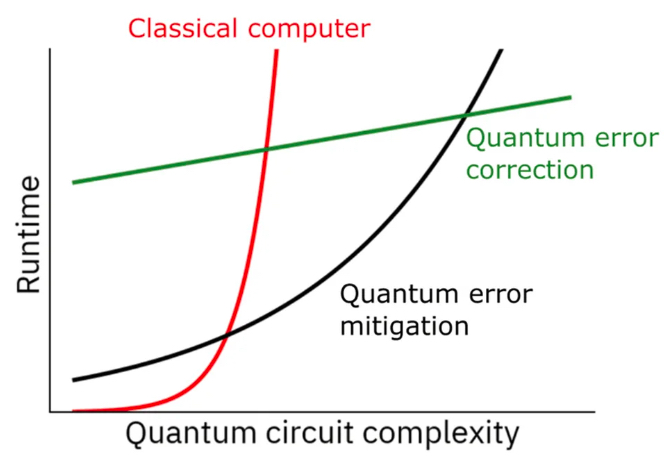

“With dynamic circuits, we can use measurement and feed-forward operations that will give more algorithmic flexibility, allow us to prepare entangled states in shorter depth, and investigate components of quantum error correction,” Sheldon said. “Ultimately, the future of quantum computing tools is problem-dependent. Considering just the hardness of the circuits, it’s a question of when the exponential overhead of error mitigated circuits will beat the exponential scaling of classical simulations.”

Chart showing quantum runtime as a function of quantum circuit complexity for classical computers, quantum computers with error correction, and quantum computers with error mitigation. Image used courtesy of IBM

In their future research, the team at IBM Quantum will also try to identify problems that map well onto their circuits to ensure that their quantum computers can solve them faster and more accurately than classical computers. Sheldon thinks that these problems, which will become apparent through collaborations with industry and academic partners, will initially be rooted in the fields of chemistry, materials science, and machine learning.

“Our collaborations are primarily at the higher levels of the stack—in software integrations and applications development,” Sheldon said. “Where we really seek partner input is in understanding how and for what problems they want to use quantum computers. To that end, we have partnerships with universities, national labs, startups, and companies across many fields of science and industry.”

Quantum Computers Under Development Are Still Useful

Sheldon believes that quantum computers could prove useful even before they attain fault tolerance. With the hardware tools and error mitigation strategies developed at IBM, these computers could soon be implemented in many academic and research settings.

“At IBM, we see error mitigation and error correction as existing on a continuum. Error mitigation will be directly impactful in the near term and eventually error correction will be necessary for fault tolerance,” Sheldon noted. “In between, error correction and error mitigation will work together to continue to access harder problems.”

Sheldon continued, “In one of our recent works, we showed one example of how this could look, with error-corrected Clifford gates and mitigated T gates. We’re at a stage in hardware development and we have the methods to produce noise-free expectation values, so now we have to ask the question: what can we do with these systems?”