The rise of artificial intelligence (AI) has ushered in a new era in computing, one in which parallelism is king. Early on, the graphics processing unit (GPU) became the dominant processor for these applications. However, even GPUs can be too general-purpose to deliver maximum performance and energy efficiency on machine learning (ML) workloads.

The new Esperanto ET-SoC-1. Image used courtesy of Esperanto

The demand for AI-specific chips that combine massive parallelism, ML-tailored workflows, and low power consumption has spawned many startups. One of them, Esperanto, recently shook up this space with a new RISC-V-based, 1,000-core accelerator that the company claims outperforms some formidable opponents.

This article discusses what is known about the new SoC and examines how it stacks up against the competition.

Esperanto’s New Chip: Looking at the Cores

At this year's Hot Chips 33 conference, one of the major headlines came from California-based company Esperanto with its new AI accelerator.

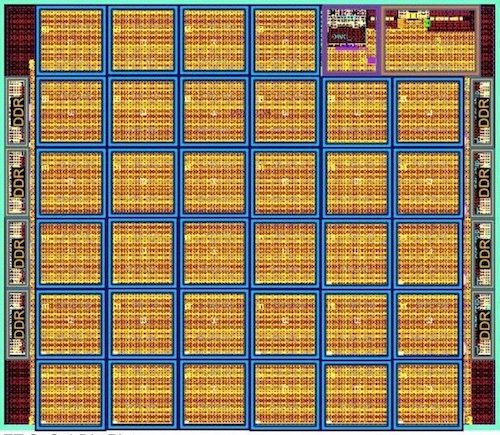

The new chip, the ET-SoC-1, was built for data center inference, with the goal of delivering high parallelism at maximum energy efficiency. To that end, it integrates more than 1,000 RISC-V cores, 160 million bytes of SRAM, and over 24 billion transistors on a single SoC built on TSMC's 7nm process. The SoC uses two kinds of cores, both based on the RISC-V ISA: the ET-Minion and the ET-Maxion.

ET-SoC-1 die plot. Image used courtesy of Esperanto

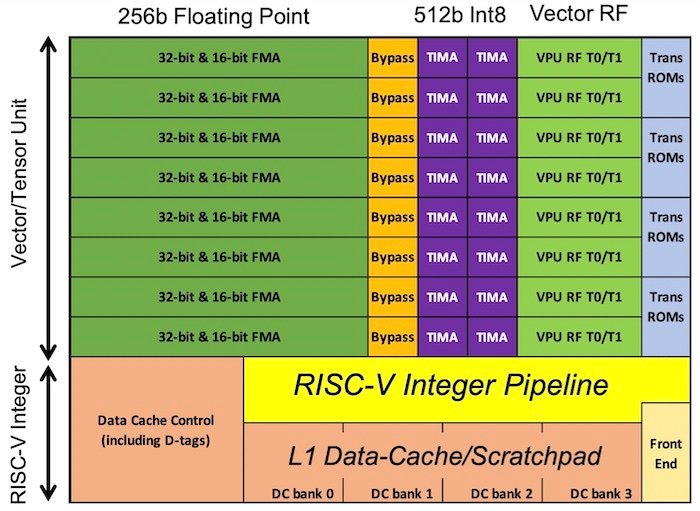

The ET-Minion is a general-purpose, 64-bit, in-order core with proprietary machine learning extensions, including vector and tensor operations on up to 256 bits of floating-point data per clock cycle. The SoC contains 1,088 of these cores.
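To put the 256-bit figure in perspective, the short sketch below works out how many floating-point values fit into one 256-bit operation, per core and across all 1,088 ET-Minion cores. It assumes plain FP32 and FP16 elements; the formats Esperanto actually supports and how its vector/tensor unit lays out lanes are not covered in this article, so treat the names and numbers here as illustrative arithmetic only.

```python
# Back-of-the-envelope arithmetic, not a model of the ET-Minion's actual datapath.
# Assumption: the 256-bit figure applies to plain FP32 and FP16 elements.
VECTOR_WIDTH_BITS = 256  # per-clock width quoted for each ET-Minion core
MINION_CORES = 1088      # ET-Minion cores on one ET-SoC-1


def lanes_per_clock(vector_bits: int, element_bits: int) -> int:
    """Return how many elements of `element_bits` fit in one `vector_bits` operation."""
    if element_bits <= 0 or vector_bits % element_bits != 0:
        raise ValueError("vector width must be a positive multiple of the element width")
    return vector_bits // element_bits


for name, bits in {"FP32": 32, "FP16": 16}.items():
    per_core = lanes_per_clock(VECTOR_WIDTH_BITS, bits)
    # Theoretical ceiling: every Minion issuing a full-width operation each cycle.
    per_chip = per_core * MINION_CORES
    print(f"{name}: {per_core} elements/clock per core, {per_chip} per chip")
```

This prints 8 FP32 (or 16 FP16) elements per clock per core. The chip-wide totals are an upper bound that real workloads will not sustain.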

The architecture of the ET-Minion core. Image used courtesy of Esperanto

The ET-Maxion is the company's proprietary high-performance, 64-bit, single-threaded core, featuring quad-issue out-of-order execution, branch prediction, and prefetching algorithms. The SoC includes four of these cores, connected through a fully coherent cache.

With the core technology covered, let's dive into this AI solution's performance.

ET-SoC-1's Performance

When it comes to performance, Esperanto aimed to hit a sweet spot between compute throughput and energy efficiency.

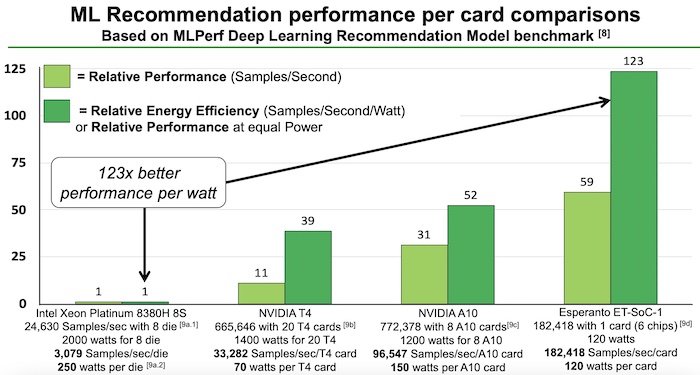

The SoC's performance per watt depends on several software-controlled settings, including clock frequency, and the company says the chip performs best at 1 GHz. Operating at roughly 0.4 V and a 1 GHz set point, the chip is reported to deliver between 100 and 200 TOPS at under 20 W. At the full 20 W, that works out to roughly 5 to 10 TOPS per watt.
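The efficiency figure is simply throughput divided by power. The sketch below applies that to the reported range, treating "under 20 watts" as a 20 W ceiling. Because the actual power is at or below that ceiling, the results are lower bounds on efficiency. The roughly 10 TOPS per watt figure corresponds to the top of the 100 to 200 TOPS range. The function name is illustrative, not from Esperanto.

```python
# Convert the reported operating point into TOPS per watt.
# Inputs are the article's figures: 100-200 TOPS at under 20 W.
def tops_per_watt(tops: float, watts: float) -> float:
    """Efficiency as throughput (TOPS) divided by power (W)."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return tops / watts


POWER_CEILING_W = 20.0  # "under 20 watts", treated as an upper bound on power

low = tops_per_watt(100.0, POWER_CEILING_W)
high = tops_per_watt(200.0, POWER_CEILING_W)
# Lower power than the ceiling would only raise these numbers.
print(f"At a full {POWER_CEILING_W:.0f} W: {low:.1f} to {high:.1f} TOPS/W")
```

Running it prints `At a full 20 W: 5.0 to 10.0 TOPS/W`.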

Esperanto’s benchmarking showed it to have better performance and energy efficiency than close competitors. Image used courtesy of Esperanto

In its own benchmarks, Esperanto found that a six-chip accelerator card with more than 6,000 cores beat the competition on MLPerf deep learning recommendation models. According to these results, the card offers better relative performance and performance per watt than comparable Intel Xeon, NVIDIA T4, and NVIDIA A10 setups.
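A quick tally shows where the six-chip card's core count comes from, along with a naive power ceiling for the chips alone. This is a sketch under stated assumptions. It counts only the 1,088 ET-Minion and 4 ET-Maxion cores per chip described earlier, and any other on-chip cores, if present, are excluded. It also assumes every chip runs at the same sub-20 W operating point and ignores card-level power such as the host interface, memory, and voltage regulation. The constant and function names are hypothetical.

```python
# Hypothetical tally for a six-chip card, using only per-chip figures from the article.
MINION_CORES_PER_CHIP = 1088
MAXION_CORES_PER_CHIP = 4
CHIP_POWER_CEILING_W = 20.0  # per-chip "under 20 W" figure, assumed for every chip
CHIPS_PER_CARD = 6


def card_totals(chips: int) -> tuple[int, float]:
    """Return (total Minion + Maxion cores, naive chip-only power ceiling in watts)."""
    cores = chips * (MINION_CORES_PER_CHIP + MAXION_CORES_PER_CHIP)
    # Naive: ignores host interface, DRAM, regulators, and cooling overhead.
    power_ceiling = chips * CHIP_POWER_CEILING_W
    return cores, power_ceiling


cores, watts = card_totals(CHIPS_PER_CARD)
print(f"{CHIPS_PER_CARD} chips: {cores} RISC-V cores, chips alone under ~{watts:.0f} W")
```

The result, 6,552 cores, is consistent with the "6,000+ cores" quoted for the card. The benchmark runs may not have used the 1 GHz, sub-20 W operating point.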

Vendor-run benchmarks can be misleading, but the company is confident that its SoC can outperform many of its closest competitors.

With a promising core architecture and encouraging early benchmark results, it will be interesting to see where Esperanto heads next.

What’s Next?

Esperanto built this SoC for low-power data center applications, and more specifically for the advertising-driven recommendation ML workloads used by social media companies. Even so, Esperanto designed the chip to be general-purpose as well, so that designers can use a six-chip accelerator card (6,000+ cores) to tackle most of the tasks they face.

If the chip proves to be as good as the company claims, it will likely find its way into data centers in the near future for both ML and general-purpose tasks.