Artificial intelligence (AI) and machine learning (ML) rank among the most significant paradigm shifts in the computing industry in the past decade. Yet, while computing workloads have changed drastically, most of the hardware we use for AI/ML has been unable to keep up.

Today, there is a growing emphasis on hardware acceleration to support the needs of AI/ML computing. With that in mind, this week IBM announced the release of its artificial intelligence unit (AIU), a system-on-chip (SoC) ASIC. The device is designed to run and train deep learning models significantly faster and more efficiently than CPUs.

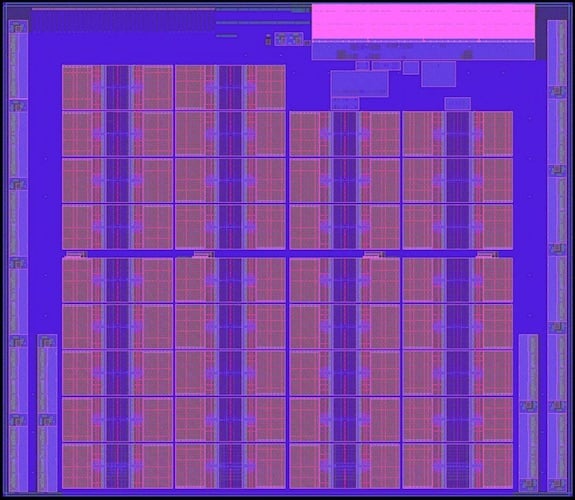

IBM AIU SoC ASIC layout. Image used courtesy of IBM

In this article, we’ll look at some of the underlying principles that guided the design of the AIU chip and details of the AIU itself.

Hardware for AI: Approximate Computing

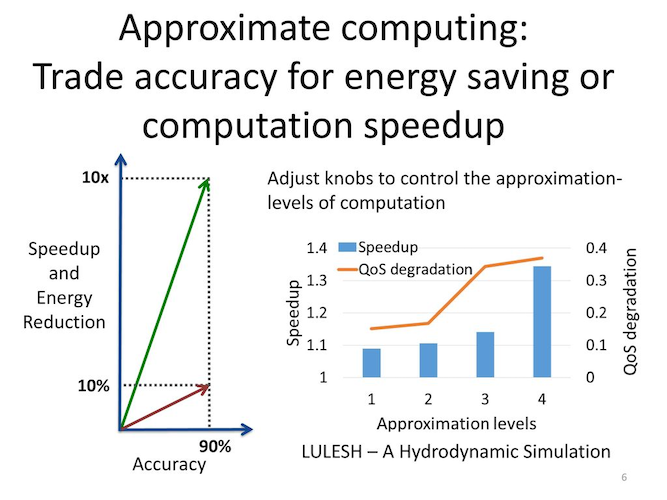

According to IBM, one of the leading principles in the design of their AIU was the concept of approximate computing. The main concept behind approximate computing is that, depending on the computing application, the necessary granularity of the data will vary tremendously.

For example, a physics simulation for determining the landing trajectory of an aircraft is an extremely detailed and important calculation. As such, it will require high levels of granularity and will likely require data types such as 64 or 32-bit floating points to represent data to the nth decimal point.

Approximate computing tradeoffs. Image used courtesy of Mitra and coauthors

Machine learning, on the other hand, needs significantly less precision, as it is more concerned with prediction and estimation. For example, when making a prediction, the difference between a 90% probability and a 90.1% probability is negligible. Hence, ML can often be performed at reduced precision, such as with an integer representation, without a meaningful loss of accuracy.

With a smaller data type, machine learning hardware has fewer bits to store and move for each value, resulting in lower power consumption from data movement, lower memory requirements, and faster ML calculations.
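To make the tradeoff concrete, here is a minimal sketch of reduced-precision storage in Python. It uses symmetric linear quantization to signed 8-bit integers, which is one common scheme and is only an assumption here; the article does not say which number formats the AIU uses. The function names and the sample weights are hypothetical.

```python
import struct

def quantize_int8(values):
    """Map floats to signed 8-bit integers using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]

# Hypothetical model weights, chosen only for illustration.
weights = [0.823, -0.417, 0.052, -1.27, 0.634]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

# Storage cost: 4 bytes per value as 32-bit floats, 1 byte per value as int8.
bytes_fp32 = len(struct.pack(f"{len(weights)}f", *weights))
bytes_int8 = len(struct.pack(f"{len(quantized)}b", *quantized))

print(f"max rounding error: {max_error:.4f} (scale = {scale:.4f})")
print(f"storage: {bytes_fp32} bytes as float32, {bytes_int8} bytes as int8")
```

In this sketch the rounding error of each value is bounded by half of the scale factor, while the storage footprint drops to a quarter of the 32-bit floating-point version. Whether that error is acceptable depends on the model and the task.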

Hardware for AI: Architecture

Aside from approximate computing, one of the guiding principles in IBM’s design of their AIU was shaping the chip's architecture to be fit for AI/ML.

In general, processing units like CPUs are meant to be general-purpose computing platforms. For this reason, their layout and architecture are not streamlined for any one computing task, which leads to more complex designs.

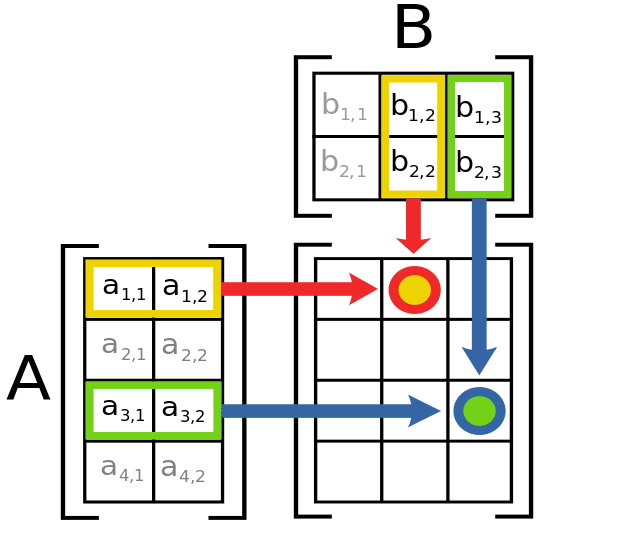

AI/ML relies heavily on matrix arithmetic. Image used courtesy of Machine Learning Mastery

AI/ML computing hardware, by contrast, mainly needs to be optimized for matrix and vector multiplication, because those operations are the basic building blocks of AI calculations.
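As an illustration of why these operations dominate, the sketch below computes one fully connected neural-network layer as a matrix-vector product followed by a simple activation. It is plain Python written for readability, not a description of how the AIU executes the operation; the function names and the numbers are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector, one multiply-accumulate at a time."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def relu(values):
    """Rectified linear activation: negative values become zero."""
    return [max(0.0, v) for v in values]

def dense_layer(weights, bias, inputs):
    """One fully connected layer: activation(weights * inputs + bias)."""
    pre_activation = matvec(weights, inputs)
    return relu([p + b for p, b in zip(pre_activation, bias)])

# Hypothetical layer with 3 inputs and 2 outputs.
weights = [[1.0, -2.0, 0.5],
           [0.0, 1.0, 1.0]]
bias = [0.5, -1.0]
inputs = [2.0, 1.0, 4.0]

outputs = dense_layer(weights, bias, inputs)
print(outputs)  # [2.5, 4.0]
```

Nearly all of the arithmetic sits in the multiply-accumulate loop inside `matvec`, and a deep learning model repeats that loop across many layers and many inputs. This is the pattern that dedicated AI hardware is built to speed up.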

With all that in mind, IBM claims to have designed its AIU to be streamlined for these AI workflows, resulting in a simpler layout than a multipurpose CPU. The benefit of this, according to IBM, is the ability for data to be sent directly from one compute engine to the next, resulting in minimized latency and power consumption in the chip.

IBM’s AIU SoC Solution

This week, IBM announced the release of its AIU, the company’s first complete SoC solution for running and training deep learning models.

Built following the aforementioned design principles, the IBM AIU is designed specifically for deep learning, with tasks ranging from image classification to keyword detection. The chip was not designed from scratch; it is a scaled-up version of the AI engine built into the company’s Telum chip, which was unveiled in August 2021.

IBM’s AIU-based graphics card. Image used courtesy of IBM

The AIU consists of 32 processing cores and uses a 5 nm process node to fit 23 billion transistors onto the SoC. For ease of use and compatibility with other systems, the AIU is packaged on a card that, like a graphics card, can be plugged into any computer or server with a PCIe slot. So far, IBM has not disclosed specifics about the AIU’s performance or cost.