NVIDIA has long been considered a leader in the computing industry, ever since it introduced the graphics processing unit (GPU) in 1999. Today, the company remains a pioneer in computing, constantly setting the bar with new technologies, standards, and products.

This year, at its annual GTC Conference, NVIDIA continued this trend of innovation by announcing a plethora of new technologies. Chief among these was its new Hopper architecture.

With that in mind, this article aims to discuss the new architecture, the underlying technologies, and how it’s poised to change the industry.

The Hopper Architecture: Transformer Engine

Arguably, one of the most noteworthy releases from NVIDIA at this year’s GTC Conference was its new Hopper architecture. Designed to support high-performance computing (HPC) and large-scale AI/ML applications, the Hopper architecture is built on five “groundbreaking innovations.”

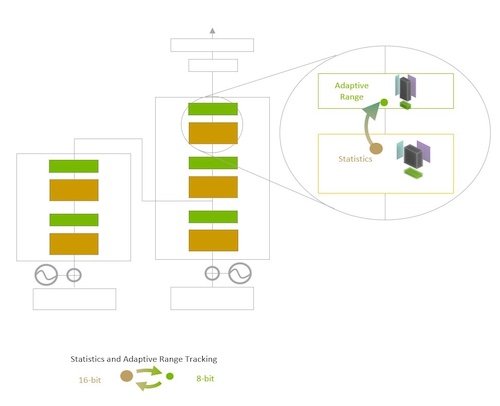

The Transformer Engine uses per-layer statistics to choose between FP16 and FP8 for each layer. Image used courtesy of NVIDIA

The first of these innovations advances NVIDIA’s Tensor Core technology with a new Transformer Engine.

The Transformer Engine combines software with NVIDIA’s proprietary Hopper Tensor Core technology, enabling Hopper Tensor Cores to apply mixed FP8 and FP16 formats during AI calculations.

NVIDIA’s custom software lets the engine automatically handle re-casting and scaling between these two precisions for each model layer. This balances the accuracy of higher-precision FP16 against the throughput gains of FP8.
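Here is a minimal NumPy sketch that makes per-layer scaling and precision selection concrete. It simulates FP8 E4M3 rounding in float64 and scales each tensor so that its largest magnitude maps to 448, the largest E4M3 value. It then applies a rule: keep FP8 if the average per-element relative error stays under 5%, and otherwise fall back to FP16. The rule is hypothetical, and so are the function names and the tolerance. This is an illustration under those assumptions, not NVIDIA’s actual Transformer Engine heuristics.

```python
import numpy as np

# FP8 E4M3 format parameters (4 exponent bits, 3 mantissa bits).
E4M3_MAX = 448.0           # largest finite E4M3 magnitude
E4M3_MIN_NORMAL_EXP = -6   # exponent of the smallest normal E4M3 value
E4M3_MANTISSA_BITS = 3


def quantize_e4m3(x: np.ndarray) -> np.ndarray:
    """Round values to the nearest E4M3-representable number (simulated in float64)."""
    x = np.clip(x, -E4M3_MAX, E4M3_MAX)
    mag = np.abs(x)
    # Subnormals share the minimum exponent, so clamp before taking log2.
    exp = np.floor(np.log2(np.maximum(mag, 2.0 ** E4M3_MIN_NORMAL_EXP)))
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)  # spacing between neighbouring values
    return np.round(x / step) * step


def fp8_roundtrip(x: np.ndarray) -> np.ndarray:
    """Scale a tensor into the E4M3 range, quantize it, then undo the scale."""
    amax = float(np.max(np.abs(x)))  # per-tensor statistic used to pick the scale
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return quantize_e4m3(x * scale) / scale


def choose_precision(x: np.ndarray, tol: float = 0.05) -> str:
    """Hypothetical rule: use FP8 unless its mean relative error exceeds tol."""
    nonzero = x != 0
    if not np.any(nonzero):
        return "fp8"
    approx = fp8_roundtrip(x)
    rel_err = np.abs(x[nonzero] - approx[nonzero]) / np.abs(x[nonzero])
    return "fp8" if rel_err.mean() <= tol else "fp16"


rng = np.random.default_rng(0)
well_behaved = rng.standard_normal(4096)
# A layer spanning a huge dynamic range: many tiny values plus one large outlier.
outlier_heavy = np.concatenate([np.full(1000, 1e-4), [1e3]])

print(choose_precision(well_behaved))   # fp8
print(choose_precision(outlier_heavy))  # fp16
```

The outlier case falls back to FP16 because of how the scale is chosen. Scaling for the single large value pushes every small value below FP8’s smallest subnormal, so those values round to zero.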

By combining the speed of FP8 calculations with the accuracy of FP16 where it is needed, NVIDIA claims the Hopper architecture achieves 30x higher throughput than the NVIDIA A100.

NVLink Switch System and Confidential Computing

Following the Transformer Engine, the next two technologies in the Hopper architecture are the NVLink Switch System and NVIDIA Confidential Computing.

The NVLink Switch System is a new GPU-to-GPU communication scheme that combines the fourth-generation NVLink interconnect with the new external NVLink Switch.

The NVLink Switch is a new rack-level switch designed for high bandwidth and low latency. It offers 128 NVLink ports with a non-blocking switching capacity of 25.6 TB/s. According to NVIDIA, the combined system can connect up to 256 GPUs while communicating across servers at a bidirectional rate of 900 GB/s, more than 7x the bandwidth of PCIe Gen5.
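Some quick arithmetic checks the “more than 7x” comparison. The sketch assumes the comparison is against a PCIe Gen5 x16 link running at 32 GT/s per lane with 128b/130b encoding, counted in both directions. It ignores packet-level protocol overhead, so the PCIe figure is a theoretical upper bound. The per-port number simply divides the 25.6 TB/s total evenly across the 128 ports. It is an average, not a per-port specification.

```python
# PCIe Gen5 x16 parameters (assumed basis for NVIDIA's comparison).
PCIE5_GT_PER_S_PER_LANE = 32.0   # raw transfer rate per lane (1 bit per transfer)
PCIE5_ENCODING = 128 / 130       # 128b/130b line-code efficiency
PCIE5_LANES = 16

# Figures quoted in the article.
NVLINK_BIDIR_GB_S = 900.0        # bidirectional NVLink rate across servers
SWITCH_CAPACITY_TB_S = 25.6      # NVLink Switch non-blocking capacity
SWITCH_PORTS = 128


def pcie5_x16_bidirectional_gb_s() -> float:
    """Theoretical PCIe Gen5 x16 bandwidth in GB/s, both directions combined."""
    per_direction = PCIE5_GT_PER_S_PER_LANE * PCIE5_ENCODING * PCIE5_LANES / 8  # bits -> bytes
    return 2 * per_direction


pcie = pcie5_x16_bidirectional_gb_s()
ratio = NVLINK_BIDIR_GB_S / pcie
per_port_gb_s = SWITCH_CAPACITY_TB_S * 1000 / SWITCH_PORTS  # even split across ports

print(f"PCIe Gen5 x16: {pcie:.1f} GB/s, NVLink advantage: {ratio:.2f}x")
print(f"Average switch capacity per port: {per_port_gb_s:.0f} GB/s")
```

This gives about 126 GB/s for PCIe Gen5 x16, which makes 900 GB/s roughly 7.14x larger. Real PCIe throughput is somewhat lower once protocol overhead is included, so the result is consistent with the “more than 7x” figure.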

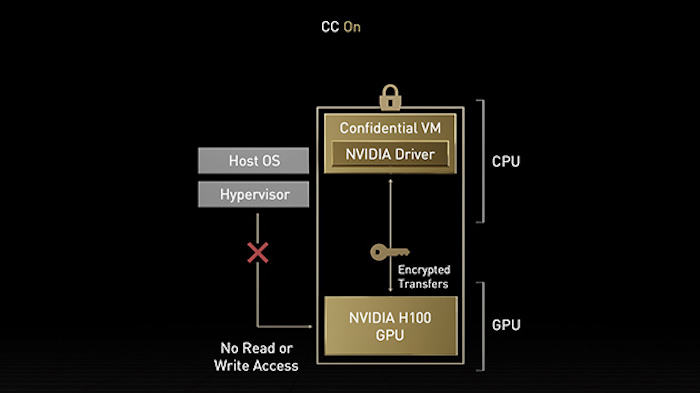

Confidential Computing prevents unauthorized access by entities such as the hypervisor or host OS during computation. Image used courtesy of NVIDIA

NVIDIA Confidential Computing, meanwhile, protects data while it is being processed, not just at rest or in transit. It achieves this through hardware security and isolation, protection from unauthorized access, and verifiability through device attestation.
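Device attestation generally works as a challenge-response exchange. A verifier sends a fresh nonce, and the device returns a measurement of its firmware bound to that nonce by a cryptographic tag. The verifier checks both the tag and the measurement before trusting the device with data. The Python sketch below shows only this general flow. It is hypothetical and is not NVIDIA’s attestation API or protocol. A shared HMAC key stands in for the asymmetric, certificate-backed keys that real hardware uses, and all names are illustrative.

```python
import hashlib
import hmac
import secrets

# Hypothetical shared key. Real devices sign with an asymmetric key whose
# certificate chains back to the vendor, so the verifier holds no secret.
DEVICE_KEY = secrets.token_bytes(32)


def measure(firmware: bytes) -> bytes:
    """Hash the firmware image to produce a measurement."""
    return hashlib.sha256(firmware).digest()


def device_attest(firmware: bytes, nonce: bytes) -> tuple[bytes, bytes]:
    """Device side: report the measurement, bound to the verifier's nonce."""
    measurement = measure(firmware)
    tag = hmac.new(DEVICE_KEY, nonce + measurement, hashlib.sha256).digest()
    return measurement, tag


def verify(nonce: bytes, measurement: bytes, tag: bytes, golden: bytes) -> bool:
    """Verifier side: check the tag is fresh and authentic, then check the measurement."""
    expected = hmac.new(DEVICE_KEY, nonce + measurement, hashlib.sha256).digest()
    return hmac.compare_digest(tag, expected) and hmac.compare_digest(measurement, golden)


golden = measure(b"trusted-firmware-v1")  # known-good measurement
nonce = secrets.token_bytes(16)           # fresh challenge prevents replay

measurement, tag = device_attest(b"trusted-firmware-v1", nonce)
print(verify(nonce, measurement, tag, golden))  # True

measurement, tag = device_attest(b"tampered-firmware", nonce)
print(verify(nonce, measurement, tag, golden))  # False
```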

According to NVIDIA, Hopper represents the world’s first accelerated computing platform with confidential computing capabilities.

Second-Generation MIG and DPX Instructions

The final two technologies that power the Hopper architecture are second-generation multi-instance GPU (MIG) and DPX instructions for dynamic programming.

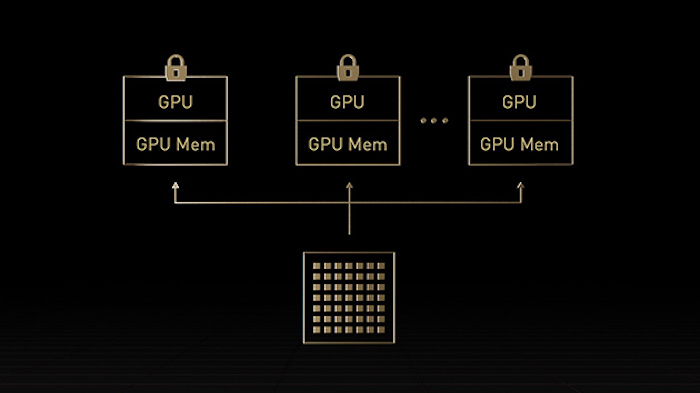

NVIDIA’s second-generation MIG is a technology that allows for the virtualization of GPUs, partitioning the device into several smaller, isolated GPU instances. Within these, each partition contains its memory, cache, and compute hardware.

New to the Hopper Architecture, the second generation MIG introduces support for features such as multi-tenant, multi-user configurations, allowing for virtualization of up to 7 GPU instances simultaneously.

Importantly, each instance is securely isolated thanks to Confidential Computing. This technology allows for resource allocation, optimization, and security in cloud environments.

Second-generation MIG allows for the virtualization of up to 7 GPU instances. Image used courtesy of NVIDIA
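Partitioning of this kind can be modeled as an allocator. It gives each instance a disjoint set of compute slices and a fixed share of memory, and it refuses requests once resources run out or the seven-instance limit is reached. This is a toy Python model with assumed numbers: a hypothetical GPU with 7 compute slices and 80 GB of memory. It does not reflect NVIDIA’s actual MIG profiles or the tools used to configure them.

```python
from dataclasses import dataclass, field

MAX_INSTANCES = 7  # up to seven GPU instances, per the article


@dataclass(frozen=True)
class GpuInstance:
    name: str
    compute_slices: tuple[int, ...]  # dedicated compute units, never shared
    memory_gb: int                   # dedicated memory, never shared


@dataclass
class PartitionedGpu:
    total_compute_slices: int = 7    # hypothetical device
    total_memory_gb: int = 80        # hypothetical device
    instances: list[GpuInstance] = field(default_factory=list)

    def free_slices(self) -> list[int]:
        used = {s for inst in self.instances for s in inst.compute_slices}
        return [s for s in range(self.total_compute_slices) if s not in used]

    def free_memory_gb(self) -> int:
        return self.total_memory_gb - sum(inst.memory_gb for inst in self.instances)

    def create_instance(self, name: str, slices: int, memory_gb: int) -> GpuInstance:
        if slices < 1 or memory_gb < 1:
            raise ValueError("an instance needs at least one slice and 1 GB")
        if len(self.instances) >= MAX_INSTANCES:
            raise RuntimeError("instance limit reached")
        free = self.free_slices()
        if slices > len(free) or memory_gb > self.free_memory_gb():
            raise RuntimeError("not enough free resources")
        # Hand out only currently free slices, so no two instances overlap.
        inst = GpuInstance(name, tuple(free[:slices]), memory_gb)
        self.instances.append(inst)
        return inst


gpu = PartitionedGpu()
gpu.create_instance("tenant-a", slices=3, memory_gb=40)
gpu.create_instance("tenant-b", slices=2, memory_gb=20)
gpu.create_instance("tenant-c", slices=2, memory_gb=20)
print([inst.compute_slices for inst in gpu.instances])  # [(0, 1, 2), (3, 4), (5, 6)]
```

The model only does the bookkeeping. On real hardware, the isolation between instances is enforced by the GPU itself.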

Finally, NVIDIA has introduced DPX instructions with the Hopper Architecture. As NVIDIA explains, dynamic programming is a technique that breaks down complex, recursive problems into simpler sub-problems.

Storing the solutions to these sub-problems so they can be reused later, rather than recomputed, reduces the time needed to solve the overall problem.
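Edit distance is a classic example of this technique. It is the minimum number of single-character insertions, deletions, and substitutions needed to turn one string into another. The Python sketch below solves it top-down and caches each sub-problem with `functools.lru_cache`, so every pair of prefixes is computed only once. Without the cache, the same recursion would revisit sub-problems exponentially often. The sketch runs on an ordinary CPU and does not use DPX instructions. It only illustrates the general dynamic-programming pattern described above.

```python
from functools import lru_cache


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance via memoized recursion (top-down dynamic programming).

    At most (len(a) + 1) * (len(b) + 1) distinct sub-problems are ever solved.
    """

    @lru_cache(maxsize=None)
    def solve(i: int, j: int) -> int:
        # Cost of turning the prefix a[:i] into the prefix b[:j].
        if i == 0:
            return j  # insert the remaining j characters of b
        if j == 0:
            return i  # delete the remaining i characters of a
        substitution = 0 if a[i - 1] == b[j - 1] else 1
        return min(
            solve(i - 1, j) + 1,                 # delete a[i-1]
            solve(i, j - 1) + 1,                 # insert b[j-1]
            solve(i - 1, j - 1) + substitution,  # match or substitute
        )

    return solve(len(a), len(b))


print(edit_distance("kitten", "sitting"))  # 3
```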

With Hopper, NVIDIA claims its new DPX instructions deliver up to 40x better performance than standard CPUs and 7x better performance than Ampere GPUs.

Unpacking NVIDIA's GTC Conference

All in all, NVIDIA's new Hopper architecture brings together a series of new technologies aimed at delivering high performance, security, and scalability for future HPC and AI infrastructure.

This year's GTC conference has given us a massive amount of new technology to uncover, like the latest Grace CPU "Superchip." It will be interesting to see more of this technology and where NVIDIA takes it in the near future.